How to effectively process millions of vaccination registrations

The challenge

On January 15th, 2021, mass enrollment for vaccinations in Poland is supposed to start. Registrations will be handled - among other channels - through the internet. During a conversation, a friend told me there is no way that the service currently designated to handle this traffic will work without issues. After all, it will receive millions of requests.

I hold the opposite opinion: it can be designed to serve this traffic effectively in terms of both performance and cost. The final service probably already exists, and hopefully it will work out well, but as a thought exercise I considered how I would design it.

This article was published originally at How to effectively process milions vaccination registrations.

Keep in mind the article’s date - it was published during the first COVID outbreak.

Let’s make some assumptions and try to select the best design:

- Suppose that half of Poland’s population would try to register via the internet. That’s 19 million registrations.

- Let’s suppose that in the first hour of registration we would experience a peak of 20,000 registrations per second, and the service needs to survive that.

- Let’s also assume that for each citizen it will be necessary to save 4 kB of data and to verify this information with external government systems.
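To get a feel for what these assumptions imply, here is a minimal sizing sketch. It uses only the three numbers from the list above and treats 1 GB as 1,000,000 kB; the function names are made up for illustration.

```python
# Rough sizing derived from the assumptions above (decimal units: 1 GB = 1,000,000 kB).
REGISTRATIONS = 19_000_000  # half of Poland's population
PEAK_RPS = 20_000           # assumed peak registrations per second
RECORD_KB = 4               # assumed data saved per citizen, in kB


def total_storage_gb(registrations: int, record_kb: int) -> float:
    """Total data to store once everyone has registered."""
    return registrations * record_kb / 1_000_000


def peak_ingest_mb_per_s(peak_rps: int, record_kb: int) -> float:
    """Payload volume arriving per second at the assumed peak."""
    return peak_rps * record_kb / 1_000


def seconds_at_peak(registrations: int, peak_rps: int) -> float:
    """How long the peak would last if every registration arrived at peak rate."""
    return registrations / peak_rps


if __name__ == "__main__":
    print(total_storage_gb(REGISTRATIONS, RECORD_KB), "GB in total")
    print(peak_ingest_mb_per_s(PEAK_RPS, RECORD_KB), "MB/s at peak")
    print(seconds_at_peak(REGISTRATIONS, PEAK_RPS), "s of sustained peak")
```

In other words, the data volume itself is small; the hard part is the request rate and the dependency on external systems.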

First of all, what I would not do:

- I would not design the service as a WordPress plugin, hoping that it would handle all the traffic

- I would not put the service on a single server

- I wouldn’t even consider hosting it within one data center. The service needs to be available from at least 2 or 3 locations.

Solution proposal

I would use a cloud provider and services that are designed to handle such a volume of traffic.

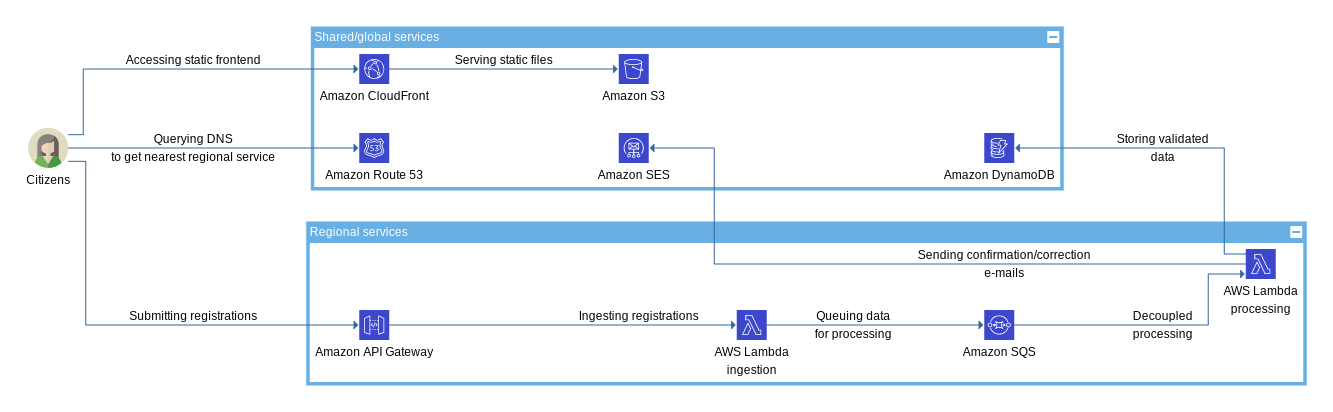

For the frontend, I would use a static HTML website hosted on AWS S3 and served by AWS CloudFront. In this configuration, it will be capable of handling virtually any traffic. This is the easier part.

Let’s look at the backend side. To process registration requests, I would use AWS API Gateway (which by default supports 10,000 requests per second per region, a limit that can be raised on request) and a Lambda underneath to write received data (where? in a moment!).

A serverless registration process could be easily deployed to multiple regions, satisfying our requirements for high availability and giving us the possibility of handling even higher request counts. There are also multiple AWS Regions within the European Union, which matters because it may be required to process and store personally identifiable information (PII) there.

However, a key challenge would be data verification with external government services. Not knowing their performance, it would be safest to assume that it won’t be enough and to design a decoupled, asynchronous system: let the collection of requests happen completely separately from verification and from sending back confirmations or requests to fill in missing data.

The SQS queue - a reasonable default solution - will work well as a transient data store. Standard queues support virtually unlimited throughput, and a queue can additionally function as an event source for a Lambda - one that will be able to contact external services, verify the data and perform the required actions.
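The ingestion side could be sketched roughly as follows. This is a minimal illustration, not the real service: the field names in `REQUIRED_FIELDS`, the helper names and the response shape are assumptions. The handler expects an API Gateway proxy-style event with a JSON string in `body`, and the sender is injected so that the logic can be exercised without AWS; `sqs_sender` shows how it might be wired to boto3's `send_message`.

```python
import json
import uuid
from typing import Any, Callable, Dict, Optional

# Hypothetical form fields; the real registration form is not described in the article.
REQUIRED_FIELDS = ("national_id", "full_name", "phone")


def _response(status: int, payload: Dict[str, Any]) -> Dict[str, Any]:
    return {"statusCode": status, "body": json.dumps(payload)}


def make_ingest_handler(send_message: Callable[[str], None]):
    """Build a Lambda-style handler that validates a request and enqueues it."""

    def handler(event: Dict[str, Any], context: Optional[Any] = None) -> Dict[str, Any]:
        try:
            payload = json.loads(event.get("body") or "")
        except json.JSONDecodeError:
            return _response(400, {"error": "invalid JSON"})
        if not isinstance(payload, dict):
            return _response(400, {"error": "expected a JSON object"})
        missing = [name for name in REQUIRED_FIELDS if not payload.get(name)]
        if missing:
            return _response(400, {"error": "missing fields", "fields": missing})

        registration_id = str(uuid.uuid4())
        # Only enqueue here; verification happens later, asynchronously.
        send_message(json.dumps({**payload, "registration_id": registration_id}))
        return _response(202, {"registration_id": registration_id})

    return handler


def sqs_sender(queue_url: str) -> Callable[[str], None]:
    """Assumed production wiring: push the message body to an SQS queue."""
    import boto3  # imported lazily so the sketch runs without AWS dependencies

    client = boto3.client("sqs")

    def send(body: str) -> None:
        client.send_message(QueueUrl=queue_url, MessageBody=body)

    return send
```

Returning 202 rather than 200 signals that the registration was accepted for processing but has not been verified yet, which matches the decoupled design.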

AWS DynamoDB can be used as a final data store. It can easily handle tens of thousands of operations per second, which is more than enough as the process will already be asynchronous.

It’s worth mentioning that all of the mentioned services support encryption both in transit and at rest to satisfy the requirements of PII protection. What’s more, they meet the requirements for HIPAA compliance with regard to the storing and processing of medical information. While these requirements aren’t necessary in Europe, it’s still nice to meet them without additional costs.
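A processing Lambda fed by the queue might look like the sketch below. It assumes the standard SQS event shape (`Records` with `messageId` and `body`) and returns a partial batch response, which only takes effect if `ReportBatchItemFailures` is enabled on the event source mapping. The `verify` and `save` callables, the `status` values and the helper names are hypothetical; the call to the external government system is deliberately left abstract.

```python
import json
from typing import Any, Callable, Dict, List, Optional


def make_processing_handler(
    verify: Callable[[Dict[str, Any]], bool],
    save: Callable[[Dict[str, Any]], None],
):
    """Build a Lambda-style handler for SQS batches: verify, then persist."""

    def handler(event: Dict[str, Any], context: Optional[Any] = None) -> Dict[str, Any]:
        failures: List[Dict[str, str]] = []
        for record in event.get("Records", []):
            try:
                registration = json.loads(record["body"])
                # verify() stands in for the call to an external government system.
                verified = verify(registration)
                registration["status"] = "verified" if verified else "needs_correction"
                save(registration)
            except Exception:
                # Report only this message as failed so SQS redelivers it later.
                failures.append({"itemIdentifier": record["messageId"]})
        return {"batchItemFailures": failures}

    return handler


def dynamodb_saver(table_name: str) -> Callable[[Dict[str, Any]], None]:
    """Assumed production wiring: store each registration as a DynamoDB item."""
    import boto3  # imported lazily so the sketch runs without AWS dependencies

    table = boto3.resource("dynamodb").Table(table_name)

    def save(item: Dict[str, Any]) -> None:
        table.put_item(Item=item)

    return save
```

If the external service is slow or down, the affected messages simply stay in the queue and are retried, while the ingestion path keeps accepting requests.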

After combining it all together, it looks like this:

For the sake of brevity, “Regional services” are displayed only once but they should be duplicated or triplicated in different EU regions.

Cost calculations

Let’s also make a back-of-the-napkin cost estimation:

- API Gateway - 19 million requests to process - 22.80 USD.

- Ingestion Lambda - assuming 19 million requests to process, a generous 500 ms per request and 256 MB of memory - 43.38 USD

- SQS - 19 million standard messages - 7.60 USD

- Processing Lambda - the same number of messages, a little bit more time to process (1,000 ms) and a generous 512 MB of memory to parse those pesky XMLs - 155.27 USD

- DynamoDB storage: 19 million citizens times 4 kB of data = 76 GB = 23.26 USD

- DynamoDB processing: Baseline 100 write op/s, peak 1000 write op/s, all transactional = 137.37 USD

Total: 390 USD for data ingestion.
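The arithmetic behind these figures can be reproduced with a short script. The unit prices below are assumptions back-derived from the numbers in the list, not values quoted from a price list, and the DynamoDB processing figure is taken as-is because its inputs are not fully specified. One observation from the arithmetic: the published Lambda figures match only if the monthly free tier (400,000 GB-seconds and 1 million requests) is applied to the processing Lambda and not to the ingestion one.

```python
# Assumed unit prices in USD, back-derived from the figures in the list above.
API_GATEWAY_PER_MILLION = 1.20
SQS_PER_MILLION = 0.40
LAMBDA_PER_GB_SECOND = 0.0000166667
LAMBDA_PER_MILLION_REQUESTS = 0.20
DYNAMODB_PER_GB_MONTH = 0.306
DYNAMODB_PROCESSING = 137.37  # taken directly from the article, not recomputed

REGISTRATIONS = 19_000_000


def lambda_cost(requests, seconds, memory_gb, free_gb_seconds=0, free_requests=0):
    """Compute cost plus request cost, optionally minus a free-tier allowance."""
    gb_seconds = max(requests * seconds * memory_gb - free_gb_seconds, 0)
    billable_requests = max(requests - free_requests, 0)
    return (gb_seconds * LAMBDA_PER_GB_SECOND
            + billable_requests / 1_000_000 * LAMBDA_PER_MILLION_REQUESTS)


def estimate(n=REGISTRATIONS):
    millions = n / 1_000_000
    return {
        "api_gateway": millions * API_GATEWAY_PER_MILLION,
        "ingestion_lambda": lambda_cost(n, 0.5, 0.25),
        "sqs": millions * SQS_PER_MILLION,
        # Free tier applied here only, to match the published figure.
        "processing_lambda": lambda_cost(n, 1.0, 0.5, 400_000, 1_000_000),
        "dynamodb_storage": n * 4 / 1_000_000 * DYNAMODB_PER_GB_MONTH,
        "dynamodb_processing": DYNAMODB_PROCESSING,
    }


if __name__ == "__main__":
    costs = estimate()
    for name, usd in costs.items():
        print(f"{name:22s} {usd:8.2f} USD")
    print(f"{'total':22s} {sum(costs.values()):8.2f} USD")
```

Changing `REGISTRATIONS` or the per-request durations makes it easy to see how sensitive the total is to each assumption.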

Well, it’s not much considering the amount of data processed and the ability to safely ingest requests at a peak rate of tens of thousands of requests per second.
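The price of that cheap ingestion is a backlog: requests arrive much faster than they are written to the final store. A rough sketch of the worst case, assuming every registration arrives at the 20,000 per second peak while the backend drains the queue at the 1,000 writes per second peak used in the cost estimate (function names are illustrative):

```python
REGISTRATIONS = 19_000_000
INGEST_PER_S = 20_000   # assumed peak ingestion rate
WRITE_PER_S = 1_000     # peak DynamoDB write rate from the cost estimate


def peak_backlog(total: int, ingest_per_s: int, write_per_s: int) -> int:
    """Messages waiting in the queue at the moment the last request arrives."""
    ingest_seconds = total / ingest_per_s
    return int(total - ingest_seconds * write_per_s)


def drain_hours(total: int, write_per_s: int) -> float:
    """Time to process everything at a constant write rate."""
    return total / write_per_s / 3600


if __name__ == "__main__":
    print(peak_backlog(REGISTRATIONS, INGEST_PER_S, WRITE_PER_S), "messages queued")
    print(round(drain_hours(REGISTRATIONS, WRITE_PER_S), 2), "hours to drain")
```

Even in this extreme scenario the queue empties in a bit over five hours, comfortably within the default SQS message retention period of four days.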

What do you think about it? Would you implement it differently or more effectively?